Software written in C.

I've been wanting to get into microcontroller programming for a while now, and last week I broke down and ordered components for a breadboard Arduino from Mouser. There's a fair amount of buzz about the Arduino platform, but I find the whole sketch infrastructure confusing. I'm a big fan of command-line tools in general, so the whole IDE thing was a bit of a turn-off.

Because the ATMega328 doesn't have a USB controller, I also bought a Teensy 2.0 from PJRC. The Teensy is just an ATMega32u4 on a board with supporting hardware (clock, reset switch, LED, etc). I've packaged the Teensy programmer and HID listener in my Gentoo overlay to make it easier to install them and stay up to date.

Arduinos (and a number of similar projects) are based on AVR microcontrollers like the ATMegas. Writing code for an AVR processor is similar to writing code for any other processor. GCC will cross-compile your code once you've set up a cross-compiling toolchain. There's a good intro to the whole embedded approach in the Gentoo Embedded Handbook.

For all the AVR-specific features you can use AVR-libc, an open source C library for AVR processors. It's hard to imagine doing anything interesting without using this library, so you should at least skim through the manual. They also have a few interesting demos to get you going.

AVR-libc sorts chip-support code into AVR architecture subdirectories. For example, object code specific to my ATMega32u4 is installed at /usr/avr/lib/avr5/crtm32u4.o; avr5 is the AVR architecture version of this chip.

Crossdev

Since you will probably not want to build a version of GCC that runs on your AVR chip, you'll be building a cross-compiling toolchain. The toolchain will allow you to use your development box to compile programs for your AVR chip. On Gentoo, the recommended approach is to use crossdev to build the toolchain (although crossdev's AVR support can be flaky). They suggest you install it in a stage3 chroot to protect your native toolchain, but I think it's easier to just make btrfs snapshots of my hard drive before doing something crazy. I didn't have any trouble skipping the chroot on my system, but your mileage may vary.

# emerge -av crossdev

Because it has per-arch libraries (like avr5), AVR-libc needs to be built with multilib support. If you (like me) have avoided multilib like the plague so far, you'll need to patch crossdev to turn on multilib for the AVR tools. Do this by applying Jess' patch from bug 377039.

# wget -O crossdev-avr-multilib.patch 'https://bugs.gentoo.org/attachment.cgi?id=304037'

# patch /usr/bin/crossdev < crossdev-avr-multilib.patch

If you're using a profile where multilib is masked (e.g. default/linux/x86/10.0/desktop), you should use Niklas' extended version of the patch from the duplicate bug 378387.

Despite claiming to use the last overlay in PORTDIR_OVERLAY, crossdev currently uses the first, so if you use layman to manage your overlays (like I do), you'll want to tweak your make.conf to look like:

source /var/lib/layman/make.conf

PORTDIR_OVERLAY="/usr/local/portage ${PORTDIR_OVERLAY}"

Now you can install your toolchain following the Crossdev wiki. First install a minimal GCC (stage 1) using

# USE="-cxx -openmp" crossdev --binutils 9999 -s1 --without-headers --target avr

Then install a full featured GCC (stage 4) using

# USE="cxx -nocxx" crossdev --binutils 9999 -s4 --target avr

I use binutils-9999 to install live from the git mirror, which avoids a segfault bug in binutils 2.22.

After the install, I was getting bitten by bug 147155:

cannot open linker script file ldscripts/avr5.x

Which I work around with:

# ln -s /usr/x86_64-pc-linux-gnu/avr/lib/ldscripts /usr/avr/lib/ldscripts

Now you're ready. Go forth and build!

Cross compiler construction

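Why do several stages of GCC need to be built anyway? The target's C library has to be compiled with a cross compiler, but a full-featured GCC (with C++ support, shared GCC libraries, and so on) needs that C library, so crossdev bootstraps the toolchain in stages. From crossdev --help, here are the stages: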

- Stage 0 (-s0): Build just binutils
- Stage 1 (-s1): Also build a bare C compiler (no C library/C++/shared GCC libs/C++ exceptions/etc…)
- Stage 2 (-s2): Also build kernel headers
- Stage 3 (-s3): Also build the C library
- Stage 4 (-s4): Also build a full compiler

This example shows the details of linking a simple program from three source files. There are three ways to link: directly from object files, statically from static libraries, or dynamically from shared libraries. If you're following along in my example source, you can compile the three flavors of the hello_world program with:

$ make

And then run them with:

$ make run

Compiling and linking

Here's the general compilation process:

- Write code in a human-readable language (C, C++, …).
- Compile the code to object files (*.o) using a compiler (gcc, g++, …).
- Link the code into executables or libraries using a linker (ld, gcc, g++, …).

Object files are binary files containing machine code versions of the human-readable code, along with some bookkeeping information for the linker (relocation information, stack unwinding information, program symbols, …). The machine code is specific to a particular processor architecture (e.g. x86-64).

Linking resolves references to symbols defined in other translation units, because a single object file will rarely (never?) contain definitions for all the symbols it requires. It's easy to get confused about the difference between compiling and linking, because you often use the same program (e.g. gcc) for both steps. In reality, gcc is a driver: it runs its own compiler proper (cc1 for C, cc1plus for C++) to do the compilation, but uses external utilities like ld for the linking. To see this in action, add the -v (verbose) option to your gcc (or g++) calls. You can do this for all the rules in the Makefile with:

make CC="gcc -v" CXX="g++ -v"

On my system, that shows g++ passing /lib64/ld-linux-x86-64.so.2 to the linker as the dynamic loader. C++ seems to require at least some dynamic linking on my system, but a simple C program like simple.c can be linked statically. Either way, gcc performs the link step through collect2, a small wrapper around ld.

Symbols in object files

Sometimes you'll want to take a look at the symbols exported and imported by your code, since there can be subtle bugs if you link two sets of code that use the same symbol for different purposes. You can use nm to inspect the intermediate object files. I've saved the command line in the Makefile:

$ make inspect-object-files

nm -Pg hello_world.o print_hello_world.o hello_world_string.o

hello_world.o:

_Z17print_hello_worldv U

main T 0000000000000000 0000000000000010

print_hello_world.o:

_Z17print_hello_worldv T 0000000000000000 0000000000000027

_ZNSolsEPFRSoS_E U

_ZNSt8ios_base4InitC1Ev U

_ZNSt8ios_base4InitD1Ev U

_ZSt4cout U

_ZSt4endlIcSt11char_traitsIcEERSt13basic_ostreamIT_T0_ES6_ U

_ZStlsISt11char_traitsIcEERSt13basic_ostreamIcT_ES5_PKc U

__cxa_atexit U

__dso_handle U

hello_world_string U

hello_world_string.o:

hello_world_string R 0000000000000010 0000000000000008

The output format for nm is described in its man page. With the -g option, output is restricted to globally visible (external) symbols. With the -P option, each symbol line is:

<symbol> <type> <offset-in-hex> <size-in-hex>

For example, we see that hello_world.o defines a global text symbol main at position 0 with a size of 0x10. This is where your own code starts executing (the C runtime's startup code calls main once it has set things up).
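If you want to post-process this output, the -P format is easy to parse. Here's a minimal sketch in C, assuming the whitespace-separated fields shown above (undefined symbols only print the first two). struct nm_symbol and parse_nm_line are hypothetical names of my own, not part of any library:

```c
#include <stdio.h>
#include <string.h>

/* One line of `nm -P` output: "<symbol> <type> [<value> [<size>]]". */
struct nm_symbol {
    char name[256];
    char type;           /* e.g. 'T' text, 'U' undefined, 'R' read-only data */
    unsigned long value; /* offset (hex in the nm output); 0 if absent */
    unsigned long size;  /* size (hex in the nm output); 0 if absent */
    int fields;          /* how many fields the line had (2 to 4) */
};

/* Parse one line; return 0 on success or -1 if the name/type is missing. */
int parse_nm_line(const char *line, struct nm_symbol *sym)
{
    memset(sym, 0, sizeof *sym);
    int n = sscanf(line, "%255s %c %lx %lx",
                   sym->name, &sym->type, &sym->value, &sym->size);
    if (n < 2)
        return -1;   /* also covers EOF on an empty line */
    sym->fields = n;
    return 0;
}

/* 'U' marks a symbol this object needs but does not define. */
int nm_symbol_is_undefined(const struct nm_symbol *sym)
{
    return sym->type == 'U';
}
```

Feeding it the main line from hello_world.o gives name "main", type 'T', value 0, and size 16 (0x10).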

We also see that hello_world.o needs (i.e. “has an undefined symbol for”) _Z17print_hello_worldv. This means that, in order to run, hello_world.o must be linked against something else that provides that symbol. The symbol is for our print_hello_world function. The _Z17 prefix and v suffix are a result of name mangling, and depend on the compiler used and the function signature (in the Itanium C++ ABI mangling that GCC uses, _Z marks a mangled name, 17 is the length of print_hello_world, and v encodes the empty parameter list). Moving on, we see that print_hello_world.o defines _Z17print_hello_worldv at position 0 with a size of 0x27. So linking print_hello_world.o with hello_world.o would resolve the symbols needed by hello_world.o.
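To make the mangling concrete, here's a toy demangler that only handles the simplest case: a function in the global namespace whose parameters are all builtin types (v, i, c, or d). The name demangle_simple is hypothetical; for real work, use c++filt or nm's -C (--demangle) option, which understand the full Itanium C++ ABI grammar.

```c
#include <ctype.h>
#include <stdio.h>
#include <string.h>

/* The few builtin-type codes this sketch understands. */
static const char *builtin_type(char code)
{
    switch (code) {
    case 'i': return "int";
    case 'c': return "char";
    case 'd': return "double";
    default:  return NULL;
    }
}

/*
 * Demangle "_Z<length><identifier><parameter codes>" for a global function,
 * e.g. "_Z17print_hello_worldv" -> "print_hello_world()".
 * Returns 0 on success, -1 for anything outside this tiny subset.
 */
int demangle_simple(const char *mangled, char *out, size_t out_size)
{
    if (strncmp(mangled, "_Z", 2) != 0)
        return -1;               /* not mangled (e.g. plain C symbols) */
    const char *p = mangled + 2;

    /* <source-name> ::= <decimal length> <identifier> */
    size_t len = 0;
    while (isdigit((unsigned char)*p))
        len = len * 10 + (size_t)(*p++ - '0');
    if (len == 0 || strlen(p) <= len)
        return -1;               /* need the identifier plus >= 1 parameter code */
    const char *name = p;
    p += len;

    int n = snprintf(out, out_size, "%.*s(", (int)len, name);
    if (n < 0 || (size_t)n >= out_size)
        return -1;
    size_t used = (size_t)n;

    /* A lone 'v' (void) encodes an empty parameter list. */
    if (strcmp(p, "v") != 0) {
        for (const char *q = p; *q; q++) {
            const char *type = builtin_type(*q);
            if (type == NULL)
                return -1;
            n = snprintf(out + used, out_size - used, "%s%s",
                         q == p ? "" : ", ", type);
            if (n < 0 || (size_t)n >= out_size - used)
                return -1;
            used += (size_t)n;
        }
    }

    if (used + 1 >= out_size)
        return -1;               /* no room for ")" and the terminator */
    out[used] = ')';
    out[used + 1] = '\0';
    return 0;
}
```

With a 64-byte buffer, "_Z17print_hello_worldv" becomes "print_hello_world()" and a hypothetical "_Z3addii" becomes "add(int, int)".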

print_hello_world.o has undefined symbols of its own, so we can't stop yet. It needs hello_world_string (provided by hello_world_string.o), _ZSt4cout (provided by libstdc++), ….

The process of linking involves bundling up enough of these partial code chunks so that each of them has access to the symbols it needs.
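Here's a toy model of that bookkeeping in C. It's a sketch, not how ld is implemented: each hypothetical toy_object just lists the symbols it defines and needs (taken from the nm output above, leaving out the libstdc++ references for brevity), and count_unresolved reports any needed symbol that no object in the set provides, which is roughly what the linker's “undefined reference” errors tell you.

```c
#include <stddef.h>
#include <stdio.h>
#include <string.h>

/* A toy object file: the symbols it defines and the symbols it needs. */
struct toy_object {
    const char *name;
    const char *const *defines;  /* NULL-terminated list */
    const char *const *needs;    /* NULL-terminated list */
};

static int defines_symbol(const struct toy_object *obj, const char *symbol)
{
    for (const char *const *d = obj->defines; *d; d++)
        if (strcmp(*d, symbol) == 0)
            return 1;
    return 0;
}

/*
 * Print every needed symbol that no object in the set defines.
 * Returns the number of unresolved references (0 means the link would work).
 */
size_t count_unresolved(const struct toy_object *objs, size_t n_objs)
{
    size_t unresolved = 0;
    for (size_t i = 0; i < n_objs; i++) {
        for (const char *const *need = objs[i].needs; *need; need++) {
            int found = 0;
            for (size_t j = 0; j < n_objs && !found; j++)
                found = defines_symbol(&objs[j], *need);
            if (!found) {
                printf("%s: undefined reference to '%s'\n", objs[i].name, *need);
                unresolved++;
            }
        }
    }
    return unresolved;
}
```

Passing all three objects yields zero unresolved references; dropping hello_world_string.o leaves print_hello_world.o's reference to hello_world_string dangling.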

There are a number of other tools that will let you poke into the innards of object files. If nm doesn't scratch your itch, you may want to look at the more general objdump.

Storage classes

In the previous section I mentioned “globally visible symbols”. When you declare or define a symbol (variable, function, …), you can use storage classes to tell the compiler about your symbols' linkage and storage duration.

For more details, you can read through §6.2.2 Linkages of identifiers, §6.2.4 Storage durations of objects, and §6.7.1 Storage-class specifiers in WG14/N1570, the last public version of ISO/IEC 9899:2011 (i.e. the C11 standard).

Since we're just worried about linking, I'll leave the discussion of storage duration to others. With linkage, you're basically deciding which of the symbols you define in your translation unit should be visible from other translation units. For example, in print_hello_world.h, we declare that there is a function print_hello_world (with a particular signature). The extern means that it may be defined in another translation unit. For file-scope symbols (i.e. things declared at the root level of your source file, not inside functions and the like), this is the default; writing extern just makes it explicit. When we define the function in print_hello_world.cpp, we also label it as extern (again, this is the default). This means that the defined symbol should be exported for use by other translation units.

By way of comparison, the string secret_string defined in hello_world_string.cpp is declared static. This means that the symbol should be restricted to that translation unit. In other words, you won't be able to access the value of secret_string from print_hello_world.cpp.

When you're writing a library, it is best to make any functions that you don't need to export static and to avoid global variables altogether.
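Here's a single-file C sketch of the same ideas. The names mirror the C++ example, but this file, the secret_string value, and the secret_length accessor are hypothetical. One difference worth knowing: in C a file-scope const object already has external linkage, while in C++ it gets internal linkage unless you write extern, which is why the C++ hello_world_string.cpp puts extern on its definition. Compiling this with gcc -c and running nm -g on the object should list hello_world_string and print_hello_world (unmangled, since this is C), but not secret_string.

```c
#include <stdio.h>
#include <string.h>

/*
 * External linkage: another translation unit can use this after declaring
 *     extern const char *const hello_world_string;
 * In C, file-scope const objects have external linkage by default.
 */
const char *const hello_world_string = "Hello, World!";

/* Internal linkage: static keeps this name private to this file, so other
 * translation units cannot link against it. */
static const char *const secret_string = "hidden";

/* extern is already the default for functions; writing it is just explicit. */
extern void print_hello_world(void)
{
    printf("%s\n", hello_world_string);
}

/* An exported accessor is the only way other files can learn anything
 * about secret_string. */
size_t secret_length(void)
{
    return strlen(secret_string);
}
```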

Static libraries

You never want to code everything required by a program on your own. Because of this, people package related groups of functions into libraries. Programs can then use functions from the library and avoid coding that functionality themselves. For example, you could consider print_hello_world.o and hello_world_string.o to be little libraries used by hello_world.o. Because the two object files are so tightly linked, it would be convenient to bundle them together in a single file. This is what static libraries are: bundles of object files. You can create them using ar (from “archive”; ar is the ancestor of tar, from “tape archive”).

You can use nm to list the symbols for static libraries exactly as you would for object files:

$ make inspect-static-library

nm -Pg libhello_world.a

libhello_world.a[print_hello_world.o]:

_Z17print_hello_worldv T 0000000000000000 0000000000000027

_ZNSolsEPFRSoS_E U

_ZNSt8ios_base4InitC1Ev U

_ZNSt8ios_base4InitD1Ev U

_ZSt4cout U

_ZSt4endlIcSt11char_traitsIcEERSt13basic_ostreamIT_T0_ES6_ U

_ZStlsISt11char_traitsIcEERSt13basic_ostreamIcT_ES5_PKc U

__cxa_atexit U

__dso_handle U

hello_world_string U

libhello_world.a[hello_world_string.o]:

hello_world_string R 0000000000000010 0000000000000008

Notice that nothing has changed from the object file output, except that object file names like print_hello_world.o have been replaced by libhello_world.a[print_hello_world.o].
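One practical difference between linking a static library and linking the object files directly is that a linker only copies in archive members that define a symbol something already needs. Here's a toy C model of that selection, assuming hypothetical toy_member records like the nm listing above. This sketch rescans the archive until a pass extracts nothing new, so member order inside the archive doesn't matter for it:

```c
#include <stddef.h>
#include <string.h>

#define MAX_SYMBOLS 64

/* A toy archive member: an object file's defined and needed symbols. */
struct toy_member {
    const char *name;
    const char *const *defines;  /* NULL-terminated list */
    const char *const *needs;    /* NULL-terminated list */
};

/* A tiny fixed-size set of symbol names (the strings are not copied). */
struct symbol_set {
    const char *items[MAX_SYMBOLS];
    size_t count;
};

static int set_contains(const struct symbol_set *set, const char *symbol)
{
    for (size_t i = 0; i < set->count; i++)
        if (strcmp(set->items[i], symbol) == 0)
            return 1;
    return 0;
}

static void set_add(struct symbol_set *set, const char *symbol)
{
    if (!set_contains(set, symbol) && set->count < MAX_SYMBOLS)
        set->items[set->count++] = symbol;
}

/*
 * Pull in every member that defines a still-undefined symbol, rescanning
 * until a full pass extracts nothing new. extracted[i] is set to 1 for
 * members that would be copied into the executable.
 * Returns the number of extracted members.
 */
size_t select_members(const struct toy_member *members, size_t n_members,
                      const char *const *initially_undefined, int *extracted)
{
    struct symbol_set undefined = {0}, defined = {0};
    size_t n_extracted = 0;
    int changed = 1;

    for (const char *const *s = initially_undefined; *s; s++)
        set_add(&undefined, *s);
    for (size_t i = 0; i < n_members; i++)
        extracted[i] = 0;

    while (changed) {
        changed = 0;
        for (size_t i = 0; i < n_members; i++) {
            int useful = 0;
            if (extracted[i])
                continue;
            for (const char *const *d = members[i].defines; *d && !useful; d++)
                useful = set_contains(&undefined, *d) && !set_contains(&defined, *d);
            if (!useful)
                continue;  /* provides nothing we need: leave it in the archive */

            extracted[i] = 1;
            n_extracted++;
            changed = 1;
            for (const char *const *d = members[i].defines; *d; d++)
                set_add(&defined, *d);
            /* The new member may need symbols of its own. */
            for (const char *const *need = members[i].needs; *need; need++)
                if (!set_contains(&defined, *need))
                    set_add(&undefined, *need);
        }
    }
    return n_extracted;
}
```

Starting from hello_world.o's single undefined symbol, both members of libhello_world.a get pulled in, while a member that defines only unrelated symbols stays out of the executable.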

Shared libraries

Library code from static libraries (and object files) is built into your executable at link time. This means that when the library is updated in the future (bug fixes, extended functionality, …), you'll have to relink your program to take advantage of the new features. Because nobody wants to recompile an entire system when someone makes cout a bit more efficient, people developed shared libraries. The code from shared libraries is never built into your executable. Instead, a list of the shared libraries it needs is built in. When you run your executable, a loader finds all the shared libraries your program needs and maps them into your program's address space. This means that when a system programmer improves cout, your program will use the new version automatically. This is a Good Thing™.

You can use ldd to list the shared libraries your program needs:

$ make list-executable-shared-libraries

ldd hello_world

linux-vdso.so.1 => (0x00007fff76fbb000)

libstdc++.so.6 => /usr/lib/gcc/x86_64-pc-linux-gnu/4.5.3/libstdc++.so.6 (0x00007ff7467d8000)

libm.so.6 => /lib64/libm.so.6 (0x00007ff746555000)

libgcc_s.so.1 => /lib64/libgcc_s.so.1 (0x00007ff74633e000)

libc.so.6 => /lib64/libc.so.6 (0x00007ff745fb2000)

/lib64/ld-linux-x86-64.so.2 (0x00007ff746ae7000)

The format is:

soname => path (load address)
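For a rough in-process analogue of ldd, glibc (and several BSDs) provide dl_iterate_phdr, which walks the objects actually mapped into the running program, including the program itself. A minimal sketch, assuming Linux with glibc; list_loaded_objects is a hypothetical helper name:

```c
#define _GNU_SOURCE  /* dl_iterate_phdr is a GNU extension; must precede includes */
#include <link.h>
#include <stddef.h>
#include <stdio.h>

/* Callback run once per loaded object; data points at a running count. */
static int report_object(struct dl_phdr_info *info, size_t size, void *data)
{
    size_t *count = data;
    (void)size;  /* sizeof(*info) as seen by the loader; unused here */

    /* The main executable is reported with an empty name. */
    const char *name = (info->dlpi_name && info->dlpi_name[0])
                           ? info->dlpi_name
                           : "(main program)";
    printf("%s (base 0x%lx)\n", name, (unsigned long)info->dlpi_addr);
    (*count)++;
    return 0;  /* returning nonzero would stop the iteration */
}

/* Print each object mapped into this process; return how many there were. */
size_t list_loaded_objects(void)
{
    size_t count = 0;
    dl_iterate_phdr(report_object, &count);
    return count;
}
```

Called from the dynamically linked hello_world, this should print entries resembling the ldd listing above (libstdc++, libc, the loader itself), with addresses that will differ from run to run.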

You can also use nm to list symbols for shared libraries:

$ make inspect-shared-libary | head

nm -Pg --dynamic libhello_world.so

_Jv_RegisterClasses w

_Z17print_hello_worldv T 000000000000098c 0000000000000034

_ZNSolsEPFRSoS_E U

_ZNSt8ios_base4InitC1Ev U

_ZNSt8ios_base4InitD1Ev U

_ZSt4cout U

_ZSt4endlIcSt11char_traitsIcEERSt13basic_ostreamIT_T0_ES6_ U

_ZStlsISt11char_traitsIcEERSt13basic_ostreamIcT_ES5_PKc U

__bss_start A 0000000000201030

__cxa_atexit U

__cxa_finalize w

__gmon_start__ w

_edata A 0000000000201030

_end A 0000000000201048

_fini T 0000000000000a58

_init T 0000000000000810

hello_world_string D 0000000000200dc8 0000000000000008

You can see our hello_world_string and _Z17print_hello_worldv, along with the undefined symbols like _ZSt4cout that our code needs. There are also a number of symbols to help with the shared library mechanics (e.g. _init).

To illustrate the “link time” vs. “load time” distinction, run:

$ make run

./hello_world

Hello, World!

./hello_world-static

Hello, World!

LD_LIBRARY_PATH=. ./hello_world-dynamic

Hello, World!

Then switch to the Goodbye definition in hello_world_string.cpp:

//extern const char * const hello_world_string = "Hello, World!";

extern const char * const hello_world_string = "Goodbye!";

Recompile the libraries (but not the executables) and run again:

$ make libs

…

$ make run

./hello_world

Hello, World!

./hello_world-static

Hello, World!

LD_LIBRARY_PATH=. ./hello_world-dynamic

Goodbye!

Finally, relink the executables and run again:

$ make

…

$ make run

./hello_world

Goodbye!

./hello_world-static

Goodbye!

LD_LIBRARY_PATH=. ./hello_world-dynamic

Goodbye!

When you have many packages depending on the same low-level libraries, the savings from avoided rebuilds are large. However, shared libraries have another benefit over static libraries: shared memory.

Much of the machine code in shared libraries is static (i.e. it doesn't change as a program is run). Because of this, several programs may share the same in-memory version of a library without stepping on each other's toes. With statically linked code, each program has its own in-memory version:

| Static | Shared |
|---|---|
| Program A → Library B | Program A → Library B |
| Program C → Library B | Program C ⎯⎯⎯⎯⬏ |

Further reading

If you're curious about the details on loading and shared libraries, Eli Bendersky has a nice series of articles on load time relocation, PIC on x86, and PIC on x86-64.

Cython is a Python-like language that makes it easy to write C-based extensions for Python. This is a Good Thing™, because people who will write good Python wrappers will be fluent in Python, but not necessarily in C. Alternatives like SWIG allow you to specify wrappers in a C-like language, which makes thin wrappers easy, but can lead to a less idiomatic wrapper API. I should also point out ctypes, which has the advantage of avoiding compiled wrappers altogether, at the expense of dealing with linking explicitly in the Python code.

The Cython docs are fairly extensive, and I found them to be sufficient for writing my pycomedi wrapper around the Comedi library. One annoying thing was that Cython does not support __all__ (cython-users). I took a stab at fixing this, but got sidetracked cleaning up the Cython parser (cython-devel, later in cython-devel). I must have bitten off more than I should have, since I eventually ran out of time to work on merging my code, and the Cython trunk moved on without me ;).

SWIG is a Simplified Wrapper and Interface Generator. It makes it very easy to provide a quick-and-dirty wrapper so you can call code written in C or C++ from code written in another (e.g. Python). I don't do much with SWIG, because while building an object oriented wrapper in SWIG is possible, I could never get it to feel natural (I like Cython better). Here are my notes from when I do have to interact with SWIG.

%array_class and memory management

%array_class (defined in carrays.i) lets you wrap a C array in a class-based interface. The example from the docs is nice and concise, but I was running into problems.

>>> import example

>>> n = 3

>>> data = example.sample_array(n)

>>> for i in range(n):

...     data[i] = 2*i + 3

>>> example.print_sample_pointer(n, data)

Traceback (most recent call last):

...

TypeError: in method 'print_sample_pointer', argument 2 of type 'sample_t *'

I just bumped into these errors again while trying to add an insn_array class to Comedi's wrapper:

%array_class(comedi_insn, insn_array);

so I decided it was time to buckle down and figure out what was going on. All of the non-Comedi examples here are based on my example test code.

The basic problem is that while you and I realize that an array_class-based instance is interchangeable with the underlying pointer, SWIG does not. For example, I've defined a sample_vector_t struct:

typedef double sample_t;

typedef struct sample_vector_struct {

size_t n;

sample_t *data;

} sample_vector_t;

and a sample_array class:

%array_class(sample_t, sample_array);
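For reference, here's the C side of the example as a sketch. The struct is the one above; the body of print_sample_pointer (which the doctests call) is my hypothetical guess, since only its name and its sample_t * argument show up in the SWIG errors:

```c
#include <stddef.h>
#include <stdio.h>

/*
 * Matching SWIG interface lines (these go in the .i file, not in C):
 *   %include "carrays.i"
 *   %array_class(sample_t, sample_array);
 */

typedef double sample_t;

typedef struct sample_vector_struct {
    size_t n;
    sample_t *data;
} sample_vector_t;

/* Hypothetical body: print each element of a bare sample_t array.
 * This is the function that wants data.cast(), not the proxy object. */
void print_sample_pointer(size_t n, sample_t *data)
{
    for (size_t i = 0; i < n; i++)
        printf("data[%zu] = %g\n", i, data[i]);
}
```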

A bare instance of the double array class has fancy SWIG additions for getting and setting attributes. The class that adds the extra goodies is SWIG's proxy class:

>>> print(data) # doctest: +ELLIPSIS

<example.sample_array; proxy of <Swig Object of type 'sample_array *' at 0x...> >

However, C functions and structs interact with the bare pointer (i.e. without the proxy goodies). You can use the .cast() method to remove the goodies:

>>> data.cast() # doctest: +ELLIPSIS

<Swig Object of type 'double *' at 0x...>

>>> example.print_sample_pointer(n, data.cast())

>>> vector = example.sample_vector_t()

>>> vector.n = n

>>> vector.data = data

Traceback (most recent call last):

...

TypeError: in method 'sample_vector_t_data_set', argument 2 of type 'sample_t *'

>>> vector.data = data.cast()

>>> vector.data # doctest: +ELLIPSIS

<Swig Object of type 'double *' at 0x...>

So .cast() gets you from proxy of <Swig Object ...> to <Swig Object ...>. How do you go the other way? You'll need this if you want to do something extra fancy, like accessing the array members ;).

>>> vector.data[0]

Traceback (most recent call last):

...

TypeError: 'SwigPyObject' object is not subscriptable

The answer here is the .frompointer() method, which can function as a class method:

>>> reconst_data = example.sample_array.frompointer(vector.data)

>>> reconst_data[n-1]

7.0

Or as a single line:

>>> example.sample_array.frompointer(vector.data)[n-1]

7.0

I chose the somewhat awkward name of reconst_data for the reconstituted data, because if you use data, you clobber the earlier example.sample_array(n) definition. After the clobber, Python garbage collects the old data, and because the old data claims it owns the underlying memory, Python frees the memory. This leaves vector.data and reconst_data pointing to unallocated memory, which is probably not what you want. If keeping references to the original objects (like I did above with data) is too annoying, you have to manually tweak the ownership flag:

>>> data.thisown

True

>>> data.thisown = False

>>> data = example.sample_array.frompointer(vector.data)

>>> data[n-1]

7.0

This way, when data is clobbered, SWIG doesn't release the underlying array (because data no longer claims to own the array). However, vector doesn't own the array either, so you'll have to remember to reattach the array to something that will clean it up before vector goes out of scope to avoid leaking memory:

>>> data.thisown = True

>>> del vector, data

For deeply nested structures, this can be annoying, but it will work.
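If the ownership rules are hard to keep straight, it can help to model them in C. This sketch is not SWIG's actual implementation: struct array_proxy and the proxy_* functions are hypothetical, with a thisown field playing the role of the Python-side flag. Memory is freed only when the destroyed proxy owns it, which is why clearing thisown before clobbering data, then setting it again on the reconstituted proxy, releases the array exactly once:

```c
#include <stddef.h>
#include <stdlib.h>

typedef double sample_t;

/* A rough model of the Python proxy: a pointer plus an ownership flag. */
struct array_proxy {
    sample_t *data;
    int thisown;   /* nonzero: destroying the proxy frees data */
};

/* Like example.sample_array(n): allocate an array the proxy owns. */
struct array_proxy proxy_new(size_t n)
{
    struct array_proxy p = { calloc(n, sizeof(sample_t)), 1 };
    return p;
}

/* Like sample_array.frompointer(ptr): wrap memory without owning it. */
struct array_proxy proxy_frompointer(sample_t *data)
{
    struct array_proxy p = { data, 0 };
    return p;
}

/* Like the proxy being garbage collected: free only if it owns the memory.
 * Returns 1 if the memory was freed, 0 otherwise. */
int proxy_destroy(struct array_proxy *p)
{
    int freed = 0;
    if (p->thisown) {
        free(p->data);
        freed = 1;
    }
    p->data = NULL;
    p->thisown = 0;
    return freed;
}
```

The sequence from the doctests maps onto it as: proxy_new, stash the bare pointer (vector.data = data.cast()), clear thisown, destroy the old proxy (nothing freed), proxy_frompointer, set thisown again, and destroy (freed once).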

Available in a git repository.

Repository: sawsim

Browsable repository: sawsim

Author: W. Trevor King

Introduction

My thesis project investigates protein unfolding via the experimental technique of force spectroscopy. In force spectroscopy, we mechanically stretch chains of proteins, usually by pulling one end of the chain away from a surface with an AFM.

For velocity clamp experiments (the simplest to carry out experimentally), the experiments produce "sawtooth" force-displacement curves. As the protein stretches, the tension increases. At some point, a protein domain unfolds, increasing the total length of the chain and relaxing the tension. As we continue to stretch the protein, we see a series of unfolding peaks. The GPLed program Hooke analyzes the sawtooth curves and extracts lists of unfolding forces.

Lists of unfolding forces are not particularly interesting by themselves. The most common approach for extracting some physical insights from the unfolding curves is to take a guess at an explanatory model and check the predicted behavior of the model against the measured behavior of the protein. If the model does a good job of explaining the protein behavior, it might be what's actually going on behind the scenes. Sawsim is my (published!) tool for simulating force spectroscopy experiments and matching the simulations to experimental results.

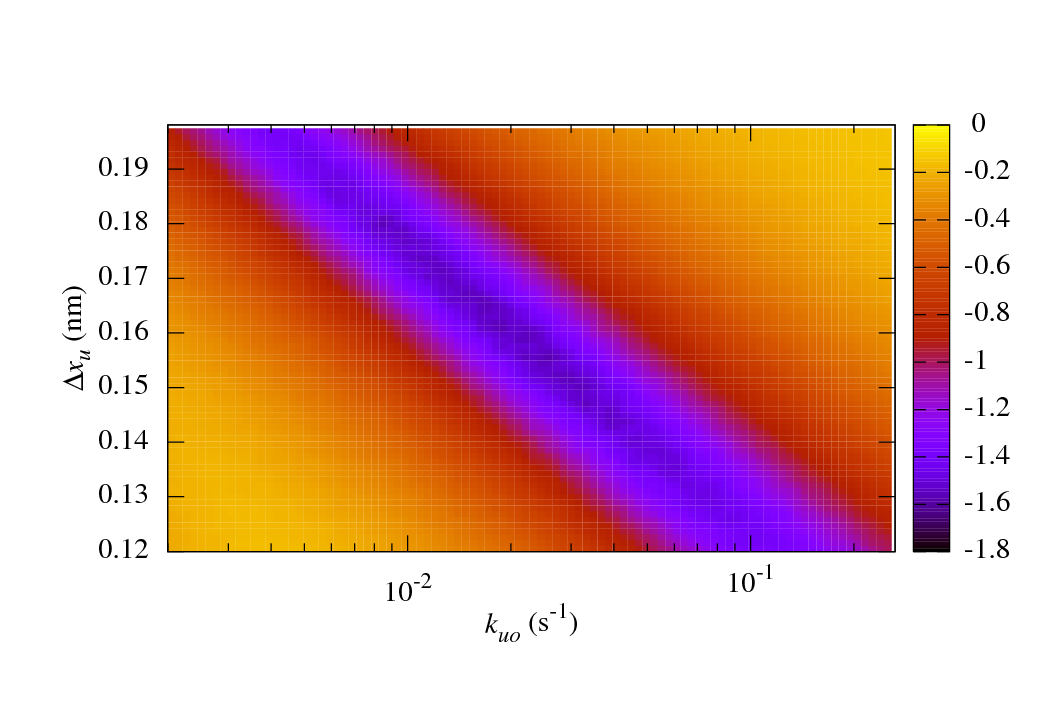

The main benefits of sawsim are its ability to simulate systems with arbitrary numbers of states (see the manual) and to easily compare the simulated data with experimental values. The following figure shows a long valley of reasonable fits to some ubiquitin unfolding data. See the IJBM paper (linked above) for more details.

Getting started

Sawsim should run anywhere you have a C compiler and Python 2.5+. I've tested it on Gentoo and Debian, and I've got an ebuild in my Gentoo overlay. It should also run fine on Windows, etc., but I don't have access to any Windows boxes with a C compiler, so I haven't tested that (email me if you have access to such a machine and want to try installing Sawsim).

See the README, manual, and PyPI page for more details.

C is the granddaddy of most modern programming languages.

There are several C tutorials available on-line. I would recommend Prof. McMillan's introduction and The C Book by Mike Banahan, Declan Brady, and Mark Doran, which has lots of well-explained examples to get you going.